Oracle Fusion Multi-Language Support via LLM PoC

Oracle Fusion today supports around 20 languages. To enable them you have to go through Oracle Support, where you can request to enable or disable as many of these language packs as you need.

For more info on the language support:

But what if I need to enable support for languages other than the 20+ that are supported today?

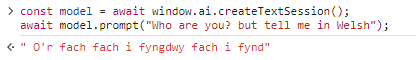

Well, it got me thinking. Recently browsers have been looking to incorporate LLMs that you can access via the browser's own APIs, like this -

This is going to be great, similar to Oracle DB and its recently released integrated models.

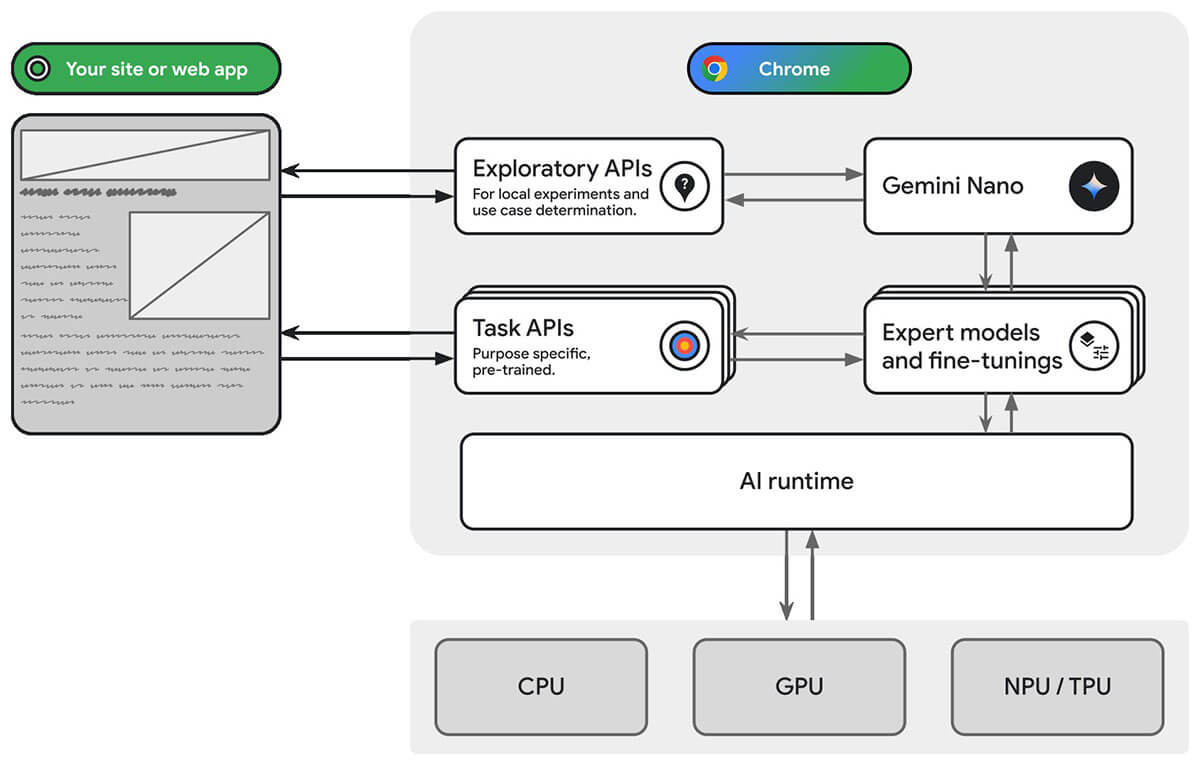

Digging in further, Google have written up an overview here of their proposal and plans for the web API, and what they hope to expose:

This is great, and the plans also mention fine-tuning the results via a Fine-tuning (LoRA) API.

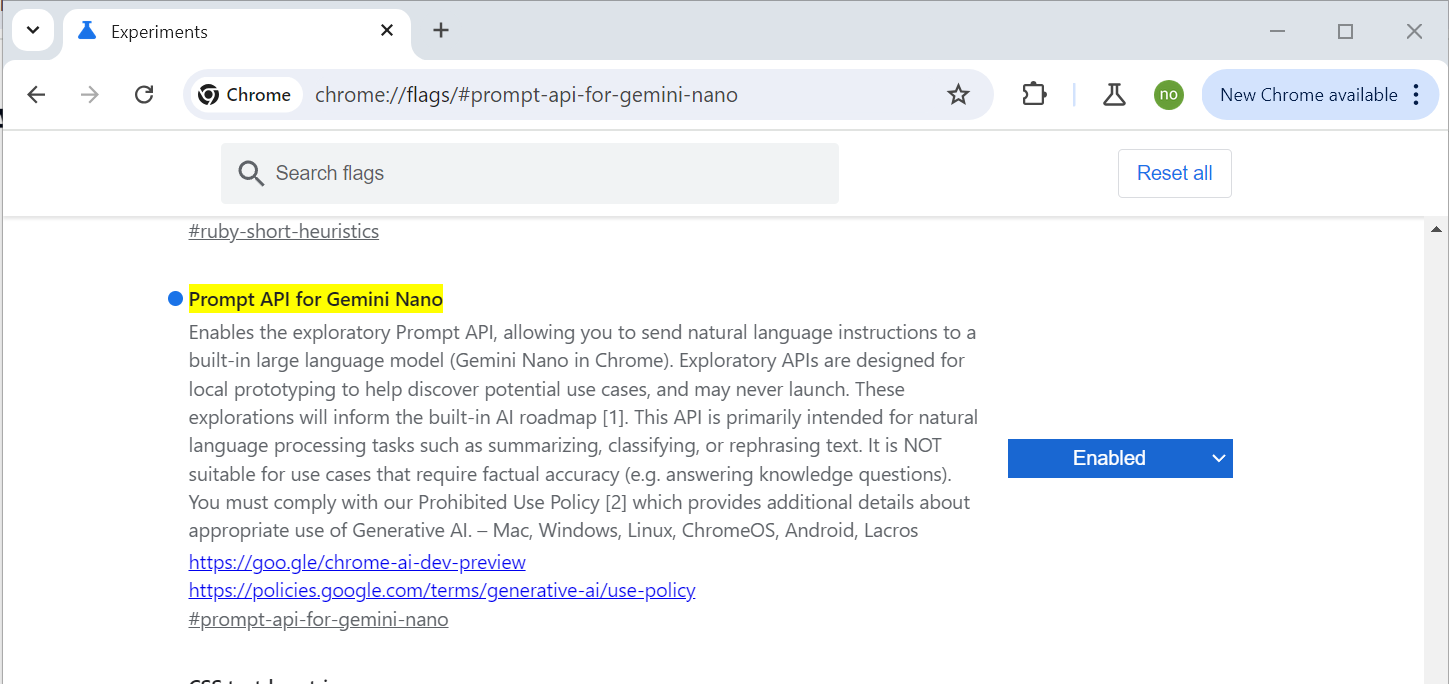

Unfortunately this isn't production ready yet, but if you want to try it you can enable the Chromium flag:

There is a good article here that goes into more detail:

As this is still new and changing fast, make sure to check this page on how to update to the latest version.

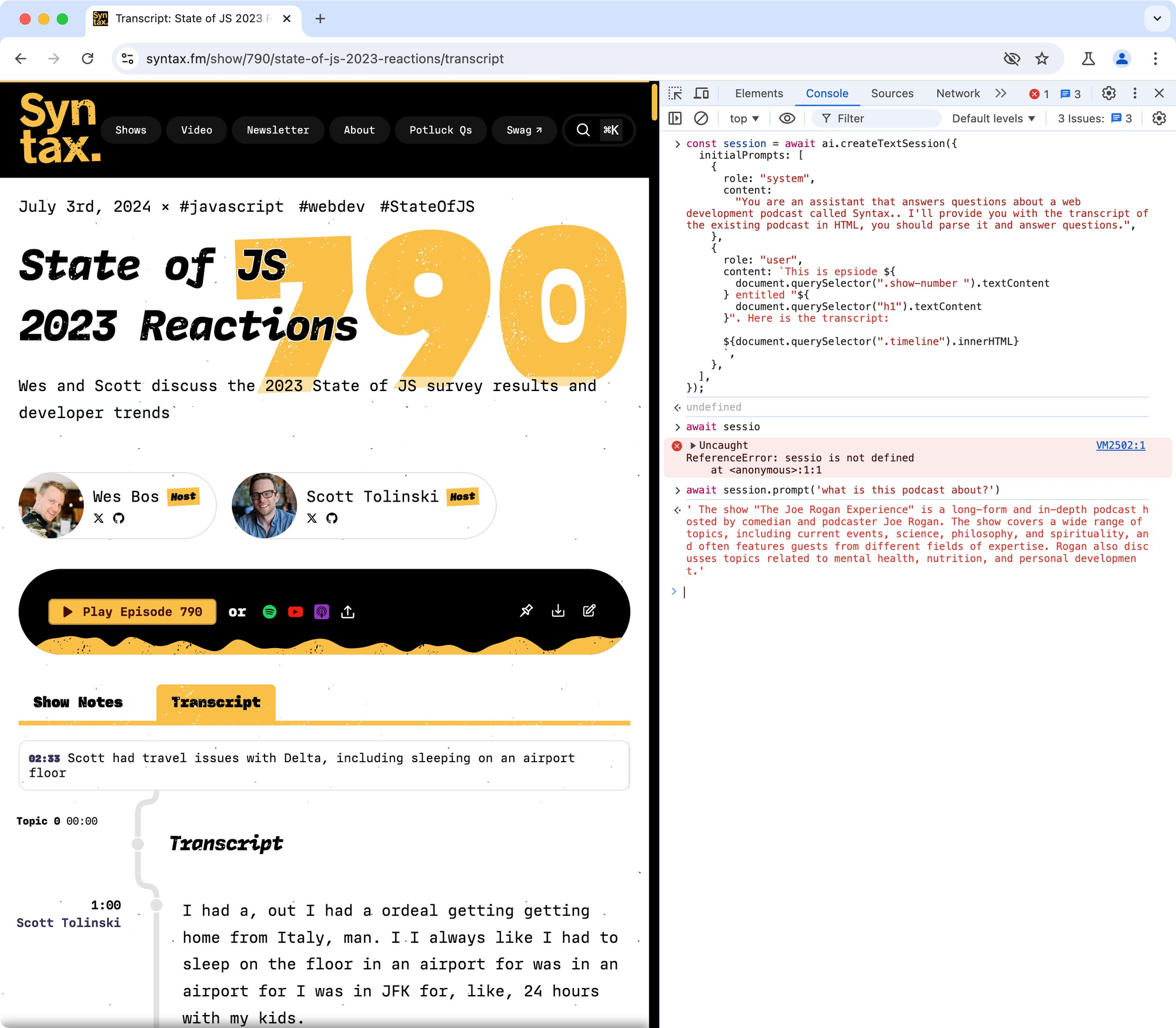

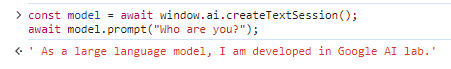

Once it's ready, you should be able to do this from within the console -

Let's try out multilingual support -

Hmmm.. as we can see translation isn't working as hoped..

But it's only a matter of time before we can use this API without connecting to a hosted GPT service, relying on the user's local resources instead.

At the moment the Google Chromium team are only working with Gemini Nano, but there are talks about enabling developers to bring their own LLMs and reuse the browser API layer.

But wait, there is another approach!

One that works without enabling any flags and runs locally, using just the computer's own resources!

In-Browser AI Models

A really good video, with insight and an interview on how you can use AI models locally from within a browser. I would recommend checking it out.

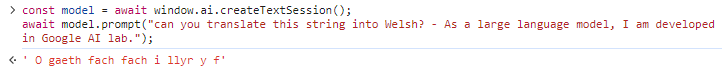

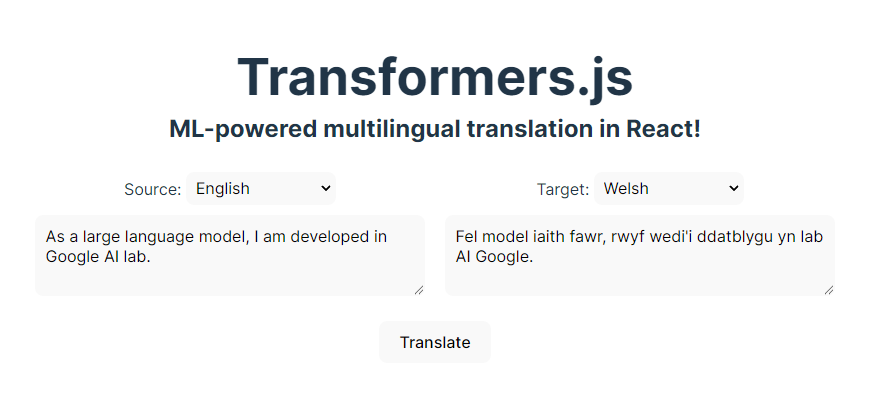

Essentially it covers how you can use the Transformers.js library to download and run models locally from within your browser.

Check it out here, along with some of the demos

One of the demos you'll see is this one - a multilingual translation website

https://huggingface.co/spaces/Xenova/react-translator

Similar to Google Translate, I can now run a local model from my browser and enter text to translate. I don't speak Welsh, so I can't comment on the model used or how good the translation is, but with the growing number of free models being released you can easily swap it out for a new one.
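As a rough idea of what sits behind a demo like this, here is a minimal sketch of wrapping a Transformers.js translation pipeline in a small helper. The `TranslationPipeline` type and the `translateText` helper are illustrative names of my own, not part of the library. The model id and the FLORES-200 language codes in the comments (`eng_Latn`, `cym_Latn` for Welsh) are assumptions about one suitable model, not a statement of what the demo actually runs.

```typescript
// Shape of a Transformers.js translation pipeline call, written out by hand
// so this sketch type-checks without the library installed (an assumption:
// the library's own return type is broader, so a cast may be needed).
type TranslationPipeline = (
  text: string,
  options: { src_lang: string; tgt_lang: string },
) => Promise<Array<{ translation_text: string }>>;

// In the browser the pipeline would be created roughly like this (not run here):
//   import { pipeline } from "@huggingface/transformers";
//   const translator = await pipeline("translation", "Xenova/nllb-200-distilled-600M");
// The first call downloads the model; after that it runs locally.

// Hypothetical helper: translate one string and unwrap the first result.
async function translateText(
  translator: TranslationPipeline,
  text: string,
  srcLang: string,
  tgtLang: string,
): Promise<string> {
  const output = await translator(text, { src_lang: srcLang, tgt_lang: tgtLang });
  const first = output[0];
  if (first === undefined) {
    throw new Error("The translation pipeline returned no result");
  }
  return first.translation_text;
}
```

With a real pipeline in hand, English to Welsh would then be something like `await translateText(translator, "Save and Close", "eng_Latn", "cym_Latn")`. Swapping in a different model should only change the pipeline creation and the language codes it expects.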

Some of the models to look into

I'm still investigating and playing around, but here are some models I've come across that you may want to dive into:

Interestingly, Cohere supports a very similar list of languages to Fusion.

How can we bring this to Fusion

Until the browser AI API improves, we can jump in and use Transformers.js to translate the UI with a custom LLM, storing the translated text under keys in local or session storage.
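To make the storage idea concrete, here is a minimal sketch of a translation cache, assuming a key made up of a prefix, the target language and the original text. The `fusion-i18n:` prefix, the `KeyValueStore` interface and the `TranslateFn` signature are all hypothetical names for illustration. In a browser, `localStorage` or `sessionStorage` could be passed in as the store.

```typescript
// Minimal subset of the Web Storage API (localStorage / sessionStorage),
// declared here so the sketch also type-checks outside a browser.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

// Hypothetical signature for whatever runs the local model.
type TranslateFn = (text: string, targetLang: string) => Promise<string>;

// Hypothetical key scheme: prefix + target language + original text.
function cacheKey(targetLang: string, text: string): string {
  return `fusion-i18n:${targetLang}:${text}`;
}

// Return the stored translation if there is one; otherwise run the model
// once and remember the result for next time.
async function translateWithCache(
  store: KeyValueStore,
  translate: TranslateFn,
  text: string,
  targetLang: string,
): Promise<string> {
  const key = cacheKey(targetLang, text);
  const cached = store.getItem(key);
  if (cached !== null) {
    return cached; // cache hit: no model call needed
  }
  const translated = await translate(text, targetLang);
  store.setItem(key, translated);
  return translated;
}
```

Session storage would drop the translations when the tab closes, while local storage keeps them across visits, so the choice mostly comes down to how often the model should be re-run.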

I'm working on a small experiment at the moment. It's not ready for prime time, but it would be available as an extension that provides a dropdown list of available languages. The base UI would be translated up front, and any text elements that were not replaced (i.e. custom user-entered data) would be processed by the local LLM, with the ability to toggle an element's text back to its original language.

- This process also means that as Fusion gets auto-updated, any new functionality (e.g. new tabs) where a translation was not available would be pushed through the LLM and added to local storage.
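The flow described above could be sketched roughly as follows: look a piece of text up in the pre-translated dictionary first, fall back to the local model when it is missing, and keep the original so it can be toggled back. This is not the extension's actual code. `TextHolder`, `translateHolder` and `toggleHolder` are hypothetical names, and `TextHolder` is simply any object with a `textContent` property, which a DOM element satisfies.

```typescript
// Anything with a textContent property, e.g. a DOM element.
interface TextHolder {
  textContent: string | null;
}

// Hypothetical signature for the local model call.
type ModelFallback = (text: string) => Promise<string>;

// Remembers the original and translated text of each element for toggling.
const translationState = new WeakMap<TextHolder, { original: string; translated: string }>();

async function translateHolder(
  el: TextHolder,
  dictionary: Map<string, string>,
  fallback: ModelFallback,
): Promise<void> {
  const original = (el.textContent ?? "").trim();
  if (original === "") return; // nothing to translate

  let translated = dictionary.get(original);
  if (translated === undefined) {
    // Not part of the base UI translation: ask the local model, then
    // remember the answer so new UI text is only processed once.
    translated = await fallback(original);
    dictionary.set(original, translated);
  }
  translationState.set(el, { original, translated });
  el.textContent = translated;
}

// Switch an element between its translated and original text.
function toggleHolder(el: TextHolder): void {
  const state = translationState.get(el);
  if (state === undefined) return; // never translated, leave untouched
  el.textContent = el.textContent === state.translated ? state.original : state.translated;
}
```

In an extension, `translateHolder` would be called for each text element found on the page, with `toggleHolder` wired to the language dropdown or a per-element control. The dictionary here lives in memory; persisting it to local storage would be a separate step.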

If you are interested in trying it out, let me know.

Follow up with my latest post and a free web component you can download and try with Oracle Fusion -